Event-Driven Architecture Pattern

An event-driven architecture uses events to trigger and communicate between decoupled services and is common in modern applications built with microservices. An event is a change in state, or an update, like an item being placed in a shopping cart on an e-commerce website. Events can either carry the state (the item purchased, its price, and a delivery address) or events can be identifiers (a notification that an order was shipped).
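The two kinds of event described above can be sketched as plain data types. This is a minimal illustration only: the class names `OrderPlaced` and `OrderShipped` and all field names are hypothetical and do not come from any particular framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from uuid import uuid4


def _new_id() -> str:
    return str(uuid4())


def _now() -> datetime:
    return datetime.now(timezone.utc)


@dataclass(frozen=True)
class OrderPlaced:
    """State-carrying event: the payload holds everything a consumer needs."""
    order_id: str
    item: str
    price: float
    delivery_address: str
    event_id: str = field(default_factory=_new_id)
    occurred_at: datetime = field(default_factory=_now)


@dataclass(frozen=True)
class OrderShipped:
    """Identifier-only event: a notification; consumers look up details if needed."""
    order_id: str
    event_id: str = field(default_factory=_new_id)
    occurred_at: datetime = field(default_factory=_now)


placed = OrderPlaced("order-1", "keyboard", 49.99, "1 Example Street")
shipped = OrderShipped(placed.order_id)
```

A state-carrying event lets consumers act without calling back to the producer, at the cost of a larger payload; an identifier-only event stays small but usually forces a lookup.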

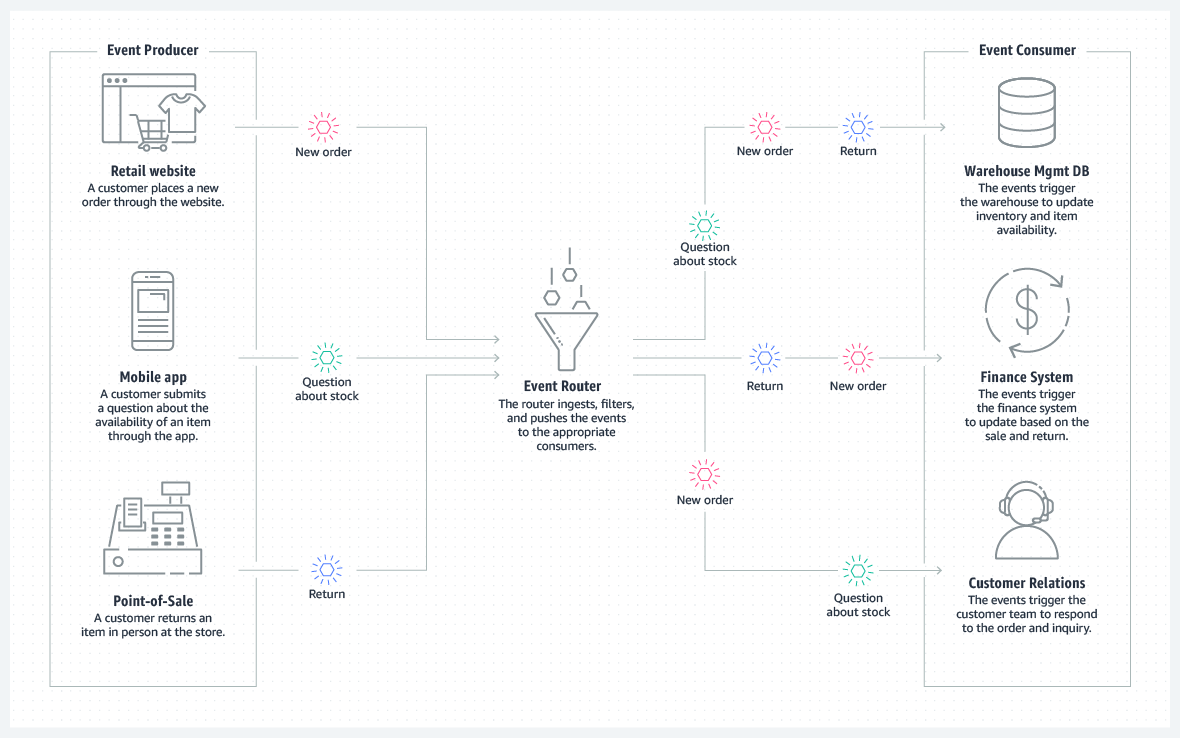

Event-driven architectures have three key components: event producers, event routers, and event consumers. A producer publishes an event to the router, which filters and pushes the events to consumers. Producer services and consumer services are decoupled, which allows them to be scaled, updated, and deployed independently.
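As a rough sketch of the producer, router, and consumer roles, the following toy in-process router filters events by type and pushes them to registered handlers. `EventRouter`, its methods, and the event type strings are assumptions made for illustration; a real router would be a separate networked service.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventRouter:
    """Toy router: producers publish, the router pushes to matching consumers."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Push the event to every handler registered for its type.

        Returns the number of consumers that received it.
        """
        handlers = self._subscribers.get(event["type"], [])
        for handler in handlers:
            handler(event)
        return len(handlers)


router = EventRouter()
inventory_log: list[str] = []
email_log: list[str] = []

# Two independent consumers register interest in the same event type.
router.subscribe("order.placed", lambda e: inventory_log.append(e["order_id"]))
router.subscribe("order.placed", lambda e: email_log.append(e["order_id"]))

# The producer only knows about the router, never about the consumers.
delivered = router.publish({"type": "order.placed", "order_id": "order-1"})
ignored = router.publish({"type": "order.cancelled", "order_id": "order-2"})
```

Here the producer publishes without knowing who is listening, which is what allows consumers to be added or removed independently.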

What does the term "event" mean in EDA?

A change in state is called an event. In an event-driven architecture, everything that happens within and to your enterprise is an event — customer requests, inventory updates, sensor readings, and so on.

Why should you use an event-driven architecture?

The value of knowing about a given event and being able to react to it degrades over time. The more quickly you can get information about events where they need to be, the more effectively your business can react to opportunities to delight a customer, shift production, and reallocate resources.

That’s why event-driven architecture, which pushes information as events happen, is a better architectural approach than waiting for systems to periodically poll for updates, as is the case with the API-led approach most companies take today.

Event-driven architecture ensures that when an event occurs, information about that event is sent to all of the systems and people that need it. It’s a simple concept, but these events have quite a journey to make. They need to move efficiently through a multitude of applications written in many different languages, using different APIs and different protocols, and arrive at many endpoints such as applications, analytics engines, and user interfaces.

If they don’t get there? Connected “things” can’t connect, the applications and systems you rely on fail, and people across your company can’t react to situations that need their attention.

Benefits of an event-driven architecture

Scale and fail independently

Because your services are decoupled, they are only aware of the event router, not each other. Your services remain interoperable, but if one service fails, the rest keep running. The event router acts as an elastic buffer that accommodates surges in workloads.

Develop with agility

You no longer need to write custom code to poll, filter, and route events; the event router will automatically filter and push events to consumers. The router also removes the need for heavy coordination between producer and consumer services, speeding up your development process.

Audit with ease

An event router acts as a centralized location to audit your application and define policies. These policies can restrict who can publish and subscribe to a router and control which users and resources have permission to access your data. You can also encrypt your events both in transit and at rest.

Cut costs

Event-driven architectures are push-based, so everything happens on demand as the event presents itself in the router. This way, you’re not paying for continuous polling to check for an event. This means less network bandwidth consumption, less CPU utilization, less idle fleet capacity, and fewer SSL/TLS handshakes.

Event-Driven Architecture Use Cases

The benefits of event-driven architecture covered above are especially relevant in use cases where a single change can have huge consequences, rippling all the way down the chain. One of the most common questions people have is, “When should you use event-driven architecture?” The answer lies in what you are trying to accomplish with your data.

Businesses looking to take advantage of real-time data in their daily activities are turning to event-driven architecture as the backbone for use cases that can benefit the most. According to a 2021 survey, the top 4 use cases for event-driven architecture were:

Integrating applications

Sharing and democratizing data across applications

Connecting IoT devices for data ingestion and analytics

Event-enabling microservices

Examples of Event-Driven Architecture

The value of event-driven architecture transcends industries and can be applied to small businesses as well as large multinational corporations. Retailers and banks can use it to aggregate data from point-of-sale systems and across distribution networks to execute promotions, optimize inventory, and offer excellent customer service.

Retail and eCommerce: An Example of Event-Driven Architecture

Consider event-driven architecture from a retail perspective. No system (inventory, finance, or customer support) polls to ask whether there are any new events; events are simply filtered and routed in real time to the services and applications that have registered their interest.

Common Event-Driven Architecture Concepts That You Should Know

There are eight key architectural concepts that need to be understood for event-driven architecture to be successful:

Event broker

Event portal

Topics

Event mesh

Deferred execution

Eventual consistency

Choreography

Command Query Responsibility Segregation (CQRS)

Event Broker

An event broker is middleware (which can be software, an appliance, or SaaS) that routes events between systems using the publish-subscribe messaging pattern. All applications connect to the event broker, which is responsible for accepting events from senders and delivering them to all systems subscribed to receive them.

It takes good system design and governance to ensure that events end up where they are needed, along with effective communication between those sending events and those who need to respond. This is where tooling, such as an event portal, can help capture, communicate, document, and govern event-driven architecture.

Event Portal

As organizations look to adopt an event-driven architecture, many are finding it difficult to document the design process and understand the impacts of changes to the system. Event portals let people design, create, discover, catalog, share, visualize, secure, and manage events and event-driven applications. Event portals serve three primary audiences:

Architects use an event portal to define, discuss, and review events, data definitions, and application relationships.

Developers use an event portal to discover, understand, and reuse events across applications, lines of business, and between external organizations.

Data scientists use an event portal to understand event-driven data and discover new insights by combining events.

Topics

Events are tagged with metadata that describes the event, called a “topic.” A topic is a hierarchical text string that describes what’s in the event. Publishers just need to know what topic to send an event to, and the event broker takes care of delivery to systems that need it. Application users can register their interest in events related to a given topic by subscribing to that topic. They can use wildcards to subscribe to a group of topics that have similar topic strings. By using the correct topic taxonomy and subscriptions, you can fulfill two rules of event-driven architecture:

A subscriber should subscribe only to the events it needs. The subscription should do the filtering, not the business logic.

A publisher should only send an event once, to one topic, and the event broker should distribute it to any number of recipients.
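Wildcard subscriptions over hierarchical topics can be sketched as follows. The syntax here is an assumption, since wildcard characters differ between brokers: levels are separated by `/`, `*` matches exactly one level, and a trailing `>` matches one or more remaining levels. The function name `topic_matches` and the example topics are hypothetical.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if a hierarchical topic matches a subscription pattern.

    Assumed syntax: '/' separates levels, '*' matches exactly one level,
    and a trailing '>' matches one or more remaining levels.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")

    for index, sub_level in enumerate(sub_levels):
        if sub_level == ">":
            # '>' is only valid as the last level and needs at least one
            # topic level left to match.
            return index == len(sub_levels) - 1 and len(topic_levels) > index
        if index >= len(topic_levels):
            return False  # subscription is longer than the topic
        if sub_level != "*" and sub_level != topic_levels[index]:
            return False  # literal level does not match

    # Without a trailing '>', both must have the same number of levels.
    return len(sub_levels) == len(topic_levels)


exact = topic_matches("retail/order/created/us", "retail/order/created/us")
any_verb = topic_matches("retail/order/*/us", "retail/order/created/us")
wrong_region = topic_matches("retail/order/*/us", "retail/order/created/eu")
all_orders = topic_matches("retail/order/>", "retail/order/created/us")
```

With a taxonomy like this, the subscription does the filtering: a consumer interested only in US orders subscribes to `retail/order/*/us` and never sees the rest.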

Event Mesh

An event mesh is created and enabled through a network of interconnected event brokers. It’s a configurable and dynamic infrastructure layer for distributing events among decoupled applications, cloud services, and devices by dynamically routing events to any application, no matter where these applications are deployed in the world, in any cloud, on-premises, or IoT environment. Technically speaking, an event mesh is a network of interconnected event brokers that share consumer topic subscription information and route messages amongst themselves so they can be passed along to subscribers.

Deferred Execution

If you’re used to REST-based APIs, the concept of deferred execution can be tricky to comprehend. The essence of event-driven architecture is that when you publish an event, you don’t wait for a response. The event broker “holds” (persists) the event until all interested consumers accept or receive it, which may be some time later. Acting on the original event may then cause other events to be emitted, which are persisted in the same way.

So event-driven architecture leads to cascades of events that are temporally and functionally independent of each other but occur in a sequence. All we know is that event A will at some point cause something to happen. The execution of the logic consuming event A isn’t necessarily instant; its execution is deferred.
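A minimal sketch of deferred execution, assuming a hypothetical in-memory `HoldingBroker` that stands in for a persistent broker: `publish` returns immediately, and consumption happens later, possibly emitting follow-up events.

```python
from collections import deque
from typing import Callable


class HoldingBroker:
    """Holds published events until a consumer is ready to process them."""

    def __init__(self) -> None:
        self._pending: deque[str] = deque()

    def publish(self, event: str) -> None:
        # Returns immediately: the publisher never waits for a consumer.
        self._pending.append(event)

    def drain(self, handler: Callable[[str], None]) -> int:
        """Deliver held events (and any they trigger) to the handler."""
        processed = 0
        while self._pending:
            handler(self._pending.popleft())
            processed += 1
        return processed


broker = HoldingBroker()
timeline: list[str] = []


def consume(event: str) -> None:
    timeline.append(f"consumed {event}")
    if event == "order.placed":
        # Acting on one event emits another, which is held in the same way.
        broker.publish("payment.requested")


broker.publish("order.placed")
timeline.append("publisher moved on")  # happens before any consumption
processed = broker.drain(consume)
```

The `timeline` list shows the publisher continuing before the event is consumed, and the follow-up event being handled afterwards.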

Eventual Consistency

Following on from this idea of deferred execution, where you expect something to happen later but don’t wait for it, is the idea of eventual consistency. Since you don’t know when an event will be consumed and you’re not waiting for confirmation, you can’t say with certainty that a given database has fully caught up with everything that needs to happen to it, and you don’t know when that will be the case. If you have multiple stateful entities (database, MDM, ERP), you can’t say they will have exactly the same state; you can’t assume they are consistent. However, for a given object, we know that it will become consistent eventually.
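To make this concrete, the sketch below has two stateful entities applying the same event log at different speeds. The `Replica` class, the names `erp` and `warehouse`, and the stock events are hypothetical.

```python
from typing import Optional


class Replica:
    """A stateful entity that applies stock events from a shared log."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.stock: dict[str, int] = {}
        self.offset = 0  # number of log entries applied so far

    def catch_up(self, log: list[tuple[str, int]], max_events: Optional[int] = None) -> None:
        """Apply unseen events, optionally limited to max_events of them."""
        end = len(log) if max_events is None else min(len(log), self.offset + max_events)
        for sku, delta in log[self.offset:end]:
            self.stock[sku] = self.stock.get(sku, 0) + delta
        self.offset = end


log = [("sku-1", 10), ("sku-1", -3), ("sku-2", 5)]
erp = Replica("erp")
warehouse = Replica("warehouse")

erp.catch_up(log)                      # fully caught up
warehouse.catch_up(log, max_events=1)  # lagging behind
consistent_now = erp.stock == warehouse.stock

warehouse.catch_up(log)                # consumes the remaining events later
consistent_later = erp.stock == warehouse.stock
```

At the first comparison the two replicas disagree; once the slower one has consumed the remaining events, they converge.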

Choreography

Deferred execution and eventual consistency lead us to the concept of choreography. To coordinate a sequence of actions being taken by different services, you could choose to introduce a master service dedicated to keeping track of all the other services and taking action if there’s an error. This approach, called orchestration, offers a single point of reference when tracing a problem, but also a single point of failure and a bottleneck.

With event-driven architecture, services are relied upon to understand what to do with an incoming event, frequently generating new events. This leads to a “dance” of individual services doing their own things but, when combined, producing an implicitly coordinated response, hence the term choreography.

CQRS: Command Query Responsibility Segregation

A common way of scaling microservices is to separate the service responsible for doing something (a command) from the service responsible for answering queries. Typically, you have to answer many more queries than updates or inserts, so separating responsibilities this way makes scaling the query service easier.

Using event-driven architecture makes this easy since the topic should contain the verb, so you simply create more instances of the query service and have it listen to the topics with the query verb.
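A simplified sketch of this idea, assuming topics of the form `<entity>/<verb>/<id>`: commands go to a single command service, while queries are spread across several query service instances. All class names and the topic layout are hypothetical, and the shared in-memory `store` is a shortcut; real CQRS systems often keep a separate read model.

```python
from itertools import cycle
from typing import Any, Optional


class OrderCommandService:
    """Handles topics whose verb changes state."""

    def __init__(self, store: dict[str, Any]) -> None:
        self.store = store

    def handle(self, topic: str, payload: Any = None) -> None:
        _entity, verb, order_id = topic.split("/")
        if verb == "create":
            self.store[order_id] = payload
        elif verb == "delete":
            self.store.pop(order_id, None)


class OrderQueryService:
    """Handles topics with the query verb; safe to run many instances."""

    def __init__(self, name: str, store: dict[str, Any]) -> None:
        self.name = name
        self.store = store

    def handle(self, topic: str) -> tuple[str, Any]:
        _entity, _verb, order_id = topic.split("/")
        return self.name, self.store.get(order_id)


store: dict[str, Any] = {}
command_service = OrderCommandService(store)
# Scaling out means adding instances to this pool.
query_pool = cycle([OrderQueryService("query-1", store),
                    OrderQueryService("query-2", store)])


def dispatch(topic: str, payload: Any = None) -> Optional[tuple[str, Any]]:
    """Route by the verb embedded in the topic."""
    verb = topic.split("/")[1]
    if verb == "query":
        return next(query_pool).handle(topic)
    command_service.handle(topic, payload)
    return None


dispatch("order/create/42", {"item": "keyboard"})
first = dispatch("order/query/42")
second = dispatch("order/query/42")
```

The two queries are answered by different instances, while the single command service remains the only writer.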

Classification of event-driven architecture

EDA is commonly classified by the topology between components in the system. Two popular models are broker topology and mediator topology.

The sections below cover both models, along with the cases where each should and should not be used.

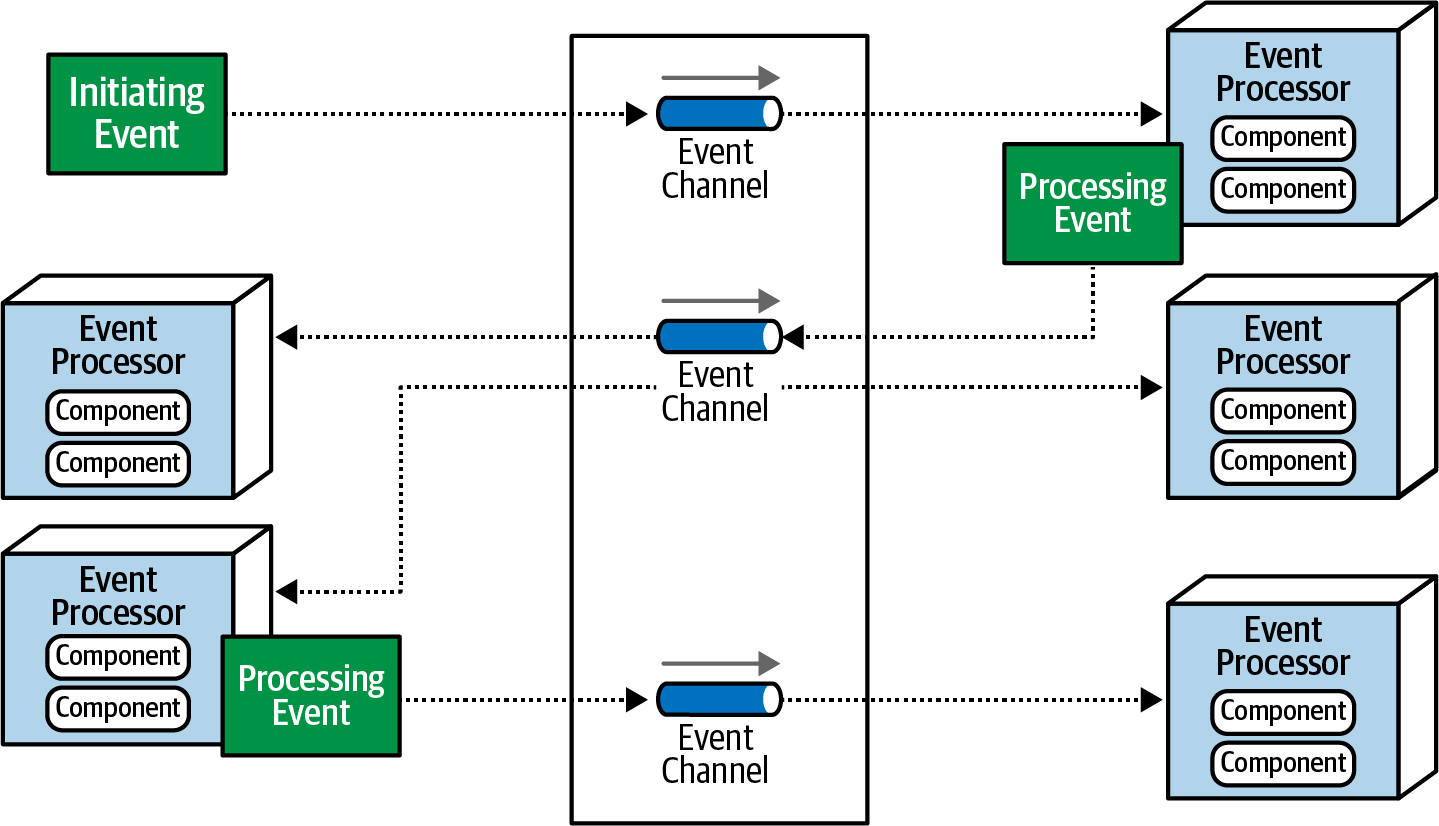

Broker topology

In the broker topology, the message flow is distributed across event processors through the message broker, without the need for a central event coordinator.

Main components of broker topology

The main components of Broker topology include:

Initiating event: the initial event that starts the entire event flow.

Event channel: stores generated events and delivers them to the services that respond to them. Event channels can be topics or queues.

Event broker: contains the event channels that participate in an event flow.

Event processor: the service responsible for processing events.

Processing event: an event created when the state of a service changes, notifying the rest of the system about that change. It is sent using a fire-and-forget broadcast mechanism, meaning the sender does not wait to confirm whether the event was received or handled.

Message flow in broker topology

The message flow in the broker topology operates in the following order:

An initiating event is sent to an event channel located in the event broker for processing.

An event processor retrieves that event from the event channel to process and perform specific tasks related to processing that event.

When processing is complete, the event processor sends a processing event to the event channel to notify that the event has been processed.

Other event processors will listen to this processing event and react by creating another processing event. This process repeats until the last event processor has finished processing.

Notice that in this flow, an event processor always sends a processing event to the broker when it finishes, whether or not anything consumes that event. If no one is listening for an event, it is simply ignored.

This may seem like a waste of resources, but in practice it is what keeps the system extensible. If a new business requirement later needs this event, you only need to add an event processor that listens for it instead of editing the logic of existing code.
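The broker flow described above might be sketched like this. The `Broker` class and the event names (`place-order`, `order-created`, `payment-applied`, `inventory-updated`) are hypothetical, and the in-memory queue stands in for real event channels.

```python
from collections import defaultdict, deque
from typing import Any, Callable

Processor = Callable[["Broker", dict[str, Any]], None]


class Broker:
    """Toy broker: no coordinator, processors react to events and emit new ones."""

    def __init__(self) -> None:
        self._channels: dict[str, list[Processor]] = defaultdict(list)
        self._queue: deque[tuple[str, dict[str, Any]]] = deque()
        self.ignored: list[str] = []  # events that nobody listened for

    def listen(self, event_name: str, processor: Processor) -> None:
        self._channels[event_name].append(processor)

    def send(self, event_name: str, payload: dict[str, Any]) -> None:
        # Fire-and-forget: the sender does not wait for any processor.
        self._queue.append((event_name, payload))

    def run(self) -> None:
        while self._queue:
            name, payload = self._queue.popleft()
            processors = self._channels.get(name, [])
            if not processors:
                self.ignored.append(name)
            for processor in processors:
                processor(self, payload)


def order_placement(broker: Broker, payload: dict[str, Any]) -> None:
    broker.send("order-created", payload)      # processing event


def payment(broker: Broker, payload: dict[str, Any]) -> None:
    broker.send("payment-applied", payload)    # processing event


def inventory(broker: Broker, payload: dict[str, Any]) -> None:
    broker.send("inventory-updated", payload)  # processing event


broker = Broker()
broker.listen("place-order", order_placement)
broker.listen("order-created", payment)
broker.listen("order-created", inventory)

broker.send("place-order", {"order_id": "order-1"})  # initiating event
broker.run()
ignored_events = broker.ignored
```

The last two processing events have no listeners and are ignored; a future service could react to them with one extra `listen` call and no change to the existing processors.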

When to use broker topology

Loose coupling is required: For systems whose components need to operate independently, without knowing about each other, broker topology provides the necessary decoupling.

Simple routing: Event processors communicate with each other through events without any managing intermediary, so broker topology suits systems with simple routing that do not need to guarantee processing order.

When not to use broker topology

Handling complex logic between components: If the system requires complex logic, data transformation, or workflow coordination between producers and consumers, broker topology may not be flexible enough. Mediator topology is often more suitable in this case.

Integrating and normalizing data from multiple sources: When data must be integrated from various sources with heterogeneous formats and protocols, broker topology may not provide the processing and normalization capabilities needed.

Central control and workflow management: If the system needs a high level of central control over event flow and workflow management, broker topology may not be suitable. Mediator topology, with its central orchestration and management capabilities, may be a better choice in this case.

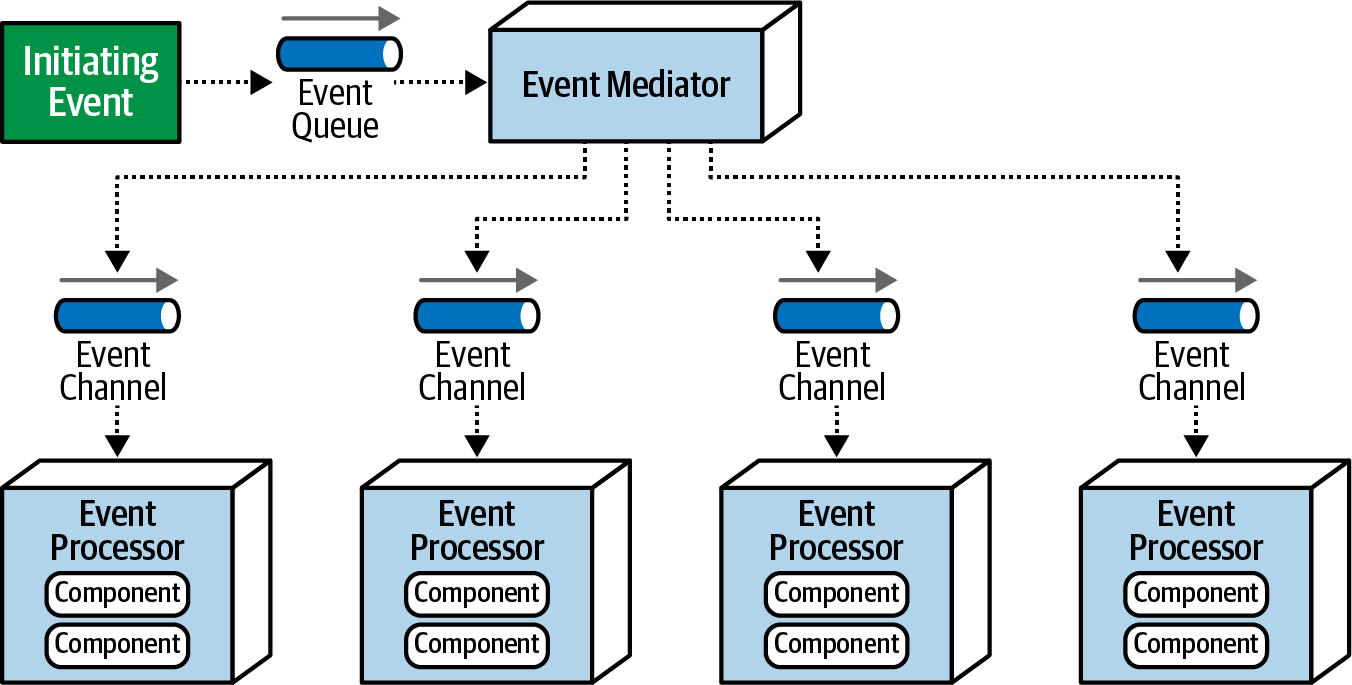

Mediator topology

In the mediator topology there is an intermediary component, usually called a "mediator", that coordinates and manages the flow of events between the services or components in the system.

The main components of mediator topology include:

Initiating event: the initial event that starts the entire event flow.

Event queue: stores initiating events so that they are not lost during processing. The queue lets events be processed sequentially, preserving consistency before each event is passed to the mediator.

Event mediator: coordinates and manages the event flow; it is the heart of the mediator model.

Event channel: same as in broker topology.

Event processor: same as in broker topology.

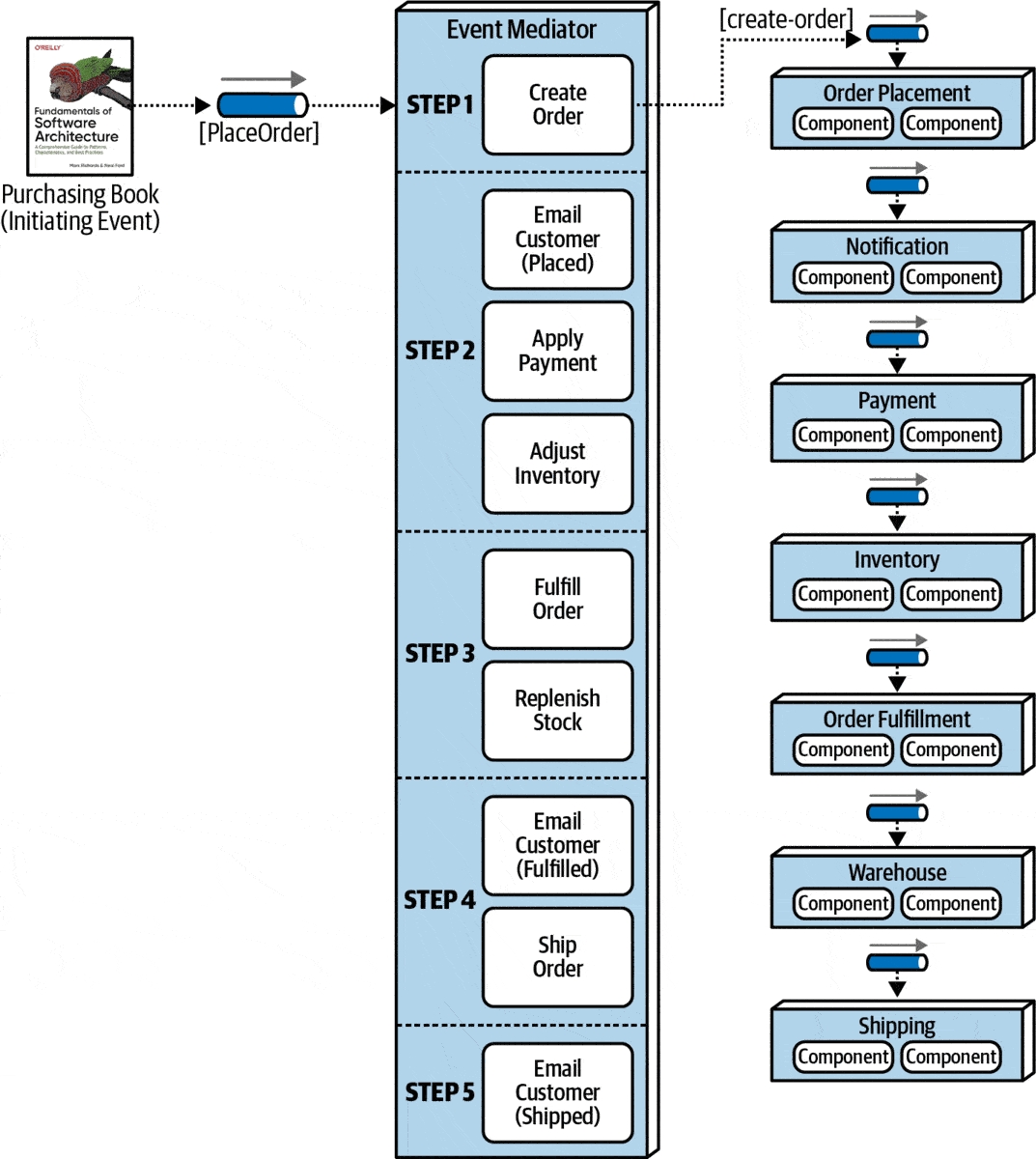

The following retail example shows how the mediator topology works:

Step 1: Create order

After receiving the initiating event PlaceOrder, the mediator creates the event create-order and sends it to the order-placement queue. The OrderPlacement event processor receives this event, validates the information, creates the order, and returns an acknowledgement to the mediator along with the order ID. Depending on the business requirements, the mediator can send an event back to the client to notify it that the order has been created.

Step 2: Order processing

The mediator creates and sends three events simultaneously, email-customer, apply-payment, and adjust-inventory, to the corresponding queues notification, payment, and inventory. The mediator must wait until the event processors have successfully processed all three events before moving on to step 3.

Step 3: Fulfill the order

As in step 2, the mediator sends the events fulfill-order and order-stock to the corresponding queues order-fulfillment and warehouse.

Step 4: Delivery

The mediator notifies the customer that the order is ready for delivery by sending the events email-customer and ship-order to the queues notification and shipping.

Step 5: Notify customers

The mediator notifies the customer of the order status by email using the event email-customer.
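The five steps can be sketched as a workflow table driven by a mediator. The event and queue names are taken from the steps in this section; the `Mediator` class, the step labels, and the processor callables are hypothetical. For simplicity this sketch runs the events within a step one after another rather than simultaneously, and treats a `True` return value as the acknowledgement.

```python
from typing import Any, Callable

# (step label, [(event name, queue name), ...])
WORKFLOW = [
    ("create order", [("create-order", "order-placement")]),
    ("order processing", [("email-customer", "notification"),
                          ("apply-payment", "payment"),
                          ("adjust-inventory", "inventory")]),
    ("fulfill order", [("fulfill-order", "order-fulfillment"),
                       ("order-stock", "warehouse")]),
    ("delivery", [("email-customer", "notification"),
                  ("ship-order", "shipping")]),
    ("notify customer", [("email-customer", "notification")]),
]

Processor = Callable[[str, dict[str, Any]], bool]


class Mediator:
    """Coordinates the workflow: a step starts only after the previous one is acknowledged."""

    def __init__(self, processors: dict[str, Processor]) -> None:
        self.processors = processors  # queue name -> event processor
        self.audit: list[str] = []

    def handle_place_order(self, order: dict[str, Any]) -> bool:
        for step, events in WORKFLOW:
            acks = [self.processors[queue](event, order) for event, queue in events]
            if not all(acks):
                self.audit.append(f"{step}: failed")
                return False  # stop: later steps depend on this one
            self.audit.append(f"{step}: done")
        return True


def make_processor(log: list[str]) -> Processor:
    def process(event: str, order: dict[str, Any]) -> bool:
        log.append(event)
        return True  # acknowledgement back to the mediator
    return process


QUEUES = ["order-placement", "notification", "payment", "inventory",
          "order-fulfillment", "warehouse", "shipping"]

handled: list[str] = []
mediator = Mediator({queue: make_processor(handled) for queue in QUEUES})
completed = mediator.handle_place_order({"order_id": "order-1"})
```

Because the mediator owns the workflow, the order of steps and the failure handling live in one place, which is the trade-off against the broker topology discussed in the next part of this section.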

When to use mediator topology

Centralized management is needed: Suitable for systems that require centralized management and tight integration to ensure events are coordinated and processed in the correct order. If the system requires tight controls on data access and security, a mediator topology can provide an additional layer of control.

Complex logic must be handled: When the system requires advanced logic processing or event transformations before events are sent to the end consumer. Mediators can perform tasks such as filtering, data transformation, or event mapping.

Diverse system integration: When many different systems with heterogeneous protocols and message formats must be integrated, mediator topology allows data transformation and standardization between systems.

When not to use mediator topology

High performance and low latency are required: Mediator topology can introduce additional latency due to intermediate processing. In systems that need extremely fast message processing and low latency, adding a mediator may not be appropriate.

Simplicity and extensibility are the priority: If the goal is to build a system that is simple, easy to understand, and easy to extend, a mediator can add unnecessary complexity.

Separation and independence between components: In systems that require a high degree of separation between components, a mediator can create a central dependency, reducing the separability and independence of the components.

Fault tolerance and recovery: The mediator becomes a central point of failure. If the mediator crashes, the entire system can be affected. In systems that need high fault tolerance, relying on a central point may not be the best choice.

Handling data loss in event-driven architecture

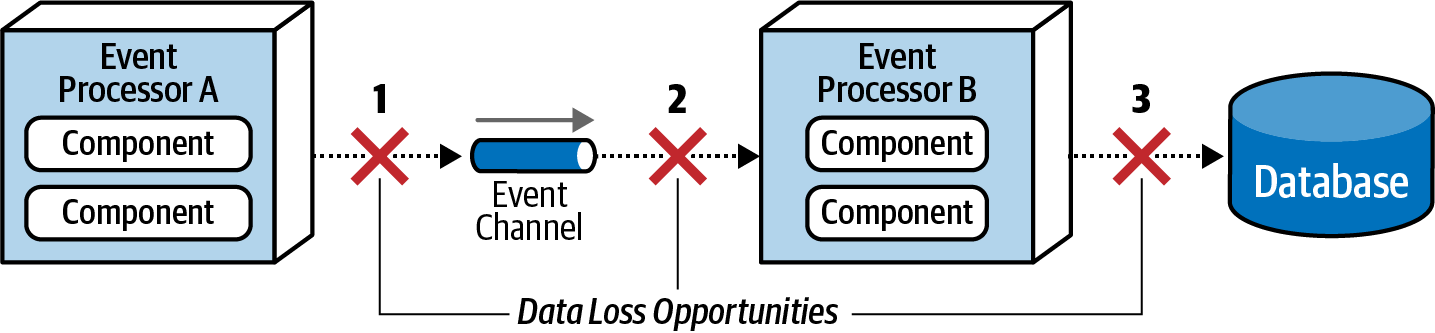

One of the central issues in EDA is data loss. It can occur at the following points:

The event processor pushes an event to the event channel: a broker failure causes the event to be lost.

The event channel delivers the event to an event processor: the event processor fails before it has received and processed the event, so the event is lost.

The event processor stores the processed data in the database: the save fails, or the database is unable to receive the data.

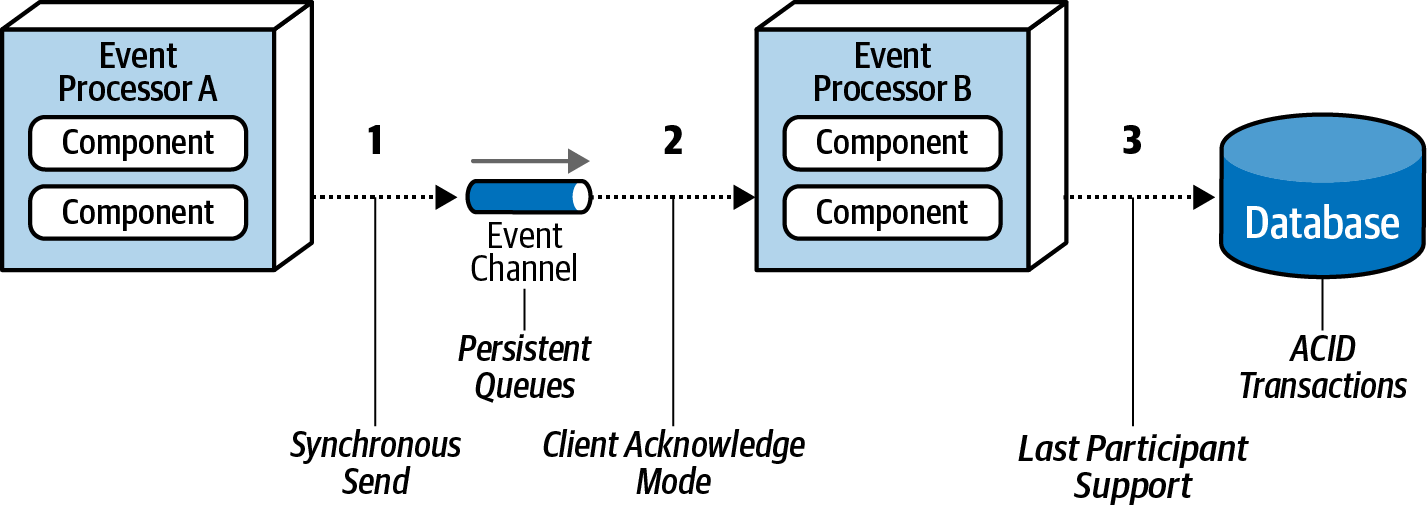

Data loss at each of these points can be addressed by the following measures:

Synchronous send with persisted queues: Use persisted message queues that support guaranteed delivery. When it receives an event, the broker stores it both in memory and in physical storage such as a filesystem or database. If the broker fails, it can retrieve the events from physical storage once it is running again and continue processing. Sending synchronously means the event processor waits for the broker to confirm that the event has been persisted.

Client acknowledge mode: By default, an event is removed from the queue as soon as it is retrieved. With client acknowledge mode, the event stays in the queue and is tagged with the ID of the client that retrieved it, which marks it as being consumed so that no other consumer can read it. If event processor A fails while processing event X, X still exists in the queue, so the data is not lost.

Last participant support (LPS): Failure to save data to the database is handled by relying on ACID transactions: once the transaction has been committed, the data is guaranteed to be saved. The event is acknowledged, and so removed from the queue, only after the data has been successfully saved to the database.
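The last two measures can be sketched together. The `AckQueue` class below is a hypothetical in-memory stand-in for a broker queue in client acknowledge mode, including a `release` method that models the broker noticing a failed client; the database is an in-memory SQLite instance from the Python standard library. The event is acknowledged only after the transaction commits.

```python
import sqlite3
from typing import Optional


class AckQueue:
    """Queue that keeps each event until its consumer acknowledges it."""

    def __init__(self) -> None:
        self._events: dict[int, str] = {}      # event id -> payload
        self._claimed_by: dict[int, str] = {}  # event id -> client id
        self._next_id = 0

    def send(self, payload: str) -> int:
        event_id = self._next_id
        self._next_id += 1
        self._events[event_id] = payload
        return event_id

    def receive(self, client_id: str) -> Optional[tuple[int, str]]:
        """Claim the first unclaimed event; it stays in the queue."""
        for event_id, payload in self._events.items():
            if event_id not in self._claimed_by:
                self._claimed_by[event_id] = client_id
                return event_id, payload
        return None

    def acknowledge(self, event_id: int, client_id: str) -> None:
        if self._claimed_by.get(event_id) != client_id:
            raise ValueError("event is not claimed by this client")
        del self._events[event_id]
        del self._claimed_by[event_id]

    def release(self, client_id: str) -> None:
        """Make a failed client's claimed events visible again."""
        for event_id in [e for e, c in self._claimed_by.items() if c == client_id]:
            del self._claimed_by[event_id]

    def __len__(self) -> int:
        return len(self._events)


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY)")

queue = AckQueue()
queue.send("order-1")

# Processor A claims the event, then crashes before acknowledging it.
queue.receive("processor-a")
hidden = queue.receive("processor-b")  # nothing visible while A holds the claim
queue.release("processor-a")           # failure detected; event is visible again

# Processor B claims it, commits to the database, and only then acknowledges.
event_id, payload = queue.receive("processor-b")
with db:  # sqlite3 commits on success and rolls back on an exception
    db.execute("INSERT INTO orders (id) VALUES (?)", (payload,))
queue.acknowledge(event_id, "processor-b")

saved = db.execute("SELECT id FROM orders").fetchall()
```

If the insert raised an exception, the acknowledgement line would never run, so the event would remain in the queue for another attempt.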

The 6 Principles of Event-Driven Architecture

To recap, the main principles of event-driven architecture are:

Use a network of event brokers to make sure the right “things” get the right events.

Use topics to make sure you only send once and only receive what you need.

Use an event portal to design, document, and govern event-driven architecture across internal and external teams.

Use event broker persistence to allow consumers to process events when they’re ready (deferred execution).

Remember, this means not everything is up-to-date (eventual consistency).

Use topics again to separate out different parts of a service (command query responsibility segregation).

Implement with AWS

There are two main types of routers used in event-driven architectures: event buses and event topics. AWS offers Amazon EventBridge to build event buses and Amazon Simple Notification Service (SNS) to build event topics.

Amazon EventBridge is recommended when you want to build an application that reacts to events from SaaS applications, AWS services, or custom applications. EventBridge uses a predefined schema for events and allows you to create rules that are applied across the entire event body to filter before pushing to consumers.

Amazon SNS is recommended when you want to build an application that reacts to high throughput and low latency events published by other applications, microservices, or AWS services, or for applications that need very high fanout (thousands or millions of endpoints). SNS topics are agnostic to the event schema coming through.
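As a rough illustration of EventBridge filtering, the sketch below builds an event pattern for a rule and a matching custom event entry. The source name `com.example.orders`, the detail type `OrderPlaced`, the rule name, and the payload fields are all made-up values. The block only constructs the data; the commented lines show how it could be sent with the boto3 SDK, assuming boto3 is installed and AWS credentials are configured.

```python
import json

# Event pattern for a rule: each listed field must match one of the given values.
pattern = {
    "source": ["com.example.orders"],
    "detail-type": ["OrderPlaced"],
    "detail": {"status": ["confirmed"]},
}

# A custom event that the pattern above would match.
entry = {
    "Source": "com.example.orders",
    "DetailType": "OrderPlaced",
    "Detail": json.dumps({"order_id": "order-1", "status": "confirmed"}),
    "EventBusName": "default",
}

pattern_json = json.dumps(pattern)

# With boto3 available, these could be submitted roughly as follows:
#   import boto3
#   events = boto3.client("events")
#   events.put_rule(Name="order-placed-rule", EventPattern=pattern_json)
#   events.put_events(Entries=[entry])
```

Targets for the rule (for example a queue or a function) would still need to be attached separately before any consumer receives the event.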

Implement with Azure

Service Bus

Event Grid

See more:

https://learn.microsoft.com/en-us/azure/architecture/guide/architecture-styles/event-driven

Conclusion

Event-driven architecture is a way of designing systems that makes your applications more flexible and lets them scale across your business. Although it brings new difficulties and complexity, it is a good way to build complex applications because it allows different teams to work independently. Some cloud services provide managed tools that help your organization build these architectures. This guide explained the core concepts of event-driven architecture, suggested some best practices, and mentioned services that can assist your development team. Remember that choosing an event-driven architecture isn’t just about technology. To truly get the benefits, you need to change how you think about development and how your team works. Developers need a lot of freedom to choose the right technology and design for their specific part of the application.
