Container

What is a container? A Docker container is a standardized unit that can be created on the fly to deploy a particular application or environment. It could be an Ubuntu container, a CentOS container, etc., to fulfill a requirement from an operating system point of view. It could also be an application-oriented container, such as a CakePHP container or a Tomcat-Ubuntu container.

Let’s understand it with an example:

A company needs to develop a Java application. To do so, the developer sets up an environment with a Tomcat server installed. Once the application is developed, it needs to be tested by the tester, who again sets up a Tomcat environment from scratch to test it. Once testing is done, the application is deployed on the production server, which again needs an environment with Tomcat installed so that it can host the Java application. As you can see, the same Tomcat environment is set up three times. This approach has some issues, which I have listed below:

1) There is a loss of time and effort.

2) There could be a version mismatch between the setups, e.g. the developer and the tester may have installed Tomcat 7, while the system admin installed Tomcat 9 on the production server.

Now, I will show you how a Docker container can be used to prevent this loss.

In this case, the developer creates a Tomcat Docker image (an image is nothing but a blueprint for deploying multiple containers with the same configuration) using a base image like Ubuntu, which already exists on Docker Hub (the Hub has some base images available for free). This image can now be used by the developer, the tester and the system admin to deploy the Tomcat environment. This is how a container solves the problem.
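
To make the idea concrete, here is a minimal sketch of how all three roles could start their environment from one shared image. The image name example/tomcat-app:1.0 and the helper start_env are hypothetical, chosen only for illustration; 8080 is Tomcat's default port.

```shell
# Hypothetical image name and tag; the article does not name the image.
IMAGE="example/tomcat-app:1.0"

# Start the environment for one role (dev, test or prod) from the shared image.
# Arguments: role name, host port to map to Tomcat's default port 8080.
start_env() {
  docker run -d --name "tomcat-$1" -p "$2:8080" "$IMAGE"
}
```

The developer could call start_env dev 8080, the tester start_env test 8080 and the system admin start_env prod 8080, each on their own machine. Because everyone uses the same image tag, everyone gets the same Tomcat version.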

I hope you are with me so far. If you have any doubts, please feel free to leave a comment; I will be glad to help you.

You might now think that this can be done using virtual machines as well. However, there is a catch if you choose to use a virtual machine. Let’s see a comparison between the two to understand this better.

Let me take you through the above diagram. Virtual Machine and Docker Container are compared on the following three parameters:

Size – This parameter compares Virtual Machine and Docker Container on the resources they utilize.

Startup – This parameter compares them on the basis of their boot time.

Integration – This parameter compares them on their ability to integrate with other tools with ease.

Size

The following image explains how Virtual Machine and Docker Container utilize the resources allocated to them.

Consider a situation depicted in the above image. I have a host system with 16 Gigabytes of RAM and I have to run 3 Virtual Machines on it. To run the Virtual Machines in parallel, I need to divide my RAM among the Virtual Machines. Suppose I allocate it in the following way:

6 GB of RAM to my first VM,

4 GB of RAM to my second VM, and

6 GB to my third VM.

In this case, I will not be left with any more RAM, even though the actual usage is:

My first VM uses only 4 GB of RAM – Allotted 6 GB – 2 GB Unused & Blocked

My second VM uses only 3 GB of RAM – Allotted 4 GB – 1 GB Unused & Blocked

My third VM uses only 2 GB of RAM – Allotted 6 GB – 4 GB Unused & Blocked

This is because once a chunk of memory is allocated to a Virtual Machine, that memory is blocked and cannot be re-allocated. I will be wasting 7 GB (2 GB + 1 GB + 4 GB) of RAM in total and thus cannot set up a new Virtual Machine. This is a major issue because RAM is costly hardware.
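
The arithmetic behind this example can be checked with a few lines of shell. The numbers are the ones from the scenario above; the variable names are only for illustration.

```shell
# Allotted and used RAM (in GB) for the three VMs in the example above.
allotted="6 4 6"
used="4 3 2"

total_allotted=0
for gb in $allotted; do total_allotted=$((total_allotted + gb)); done

total_used=0
for gb in $used; do total_used=$((total_used + gb)); done

# RAM that is reserved by the VMs but not actually used.
wasted=$((total_allotted - total_used))
echo "Allotted: ${total_allotted} GB, used: ${total_used} GB, unused and blocked: ${wasted} GB"
```

All 16 GB of the host are allotted, only 9 GB are in use, and the remaining 7 GB are blocked.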

So, how can I avoid this problem?

If I use Docker, the host gives each container only the amount of memory that the container actually requires.

My first container will use only 4 GB of RAM – Allotted 4 GB – 0 GB Unused & Blocked

My second container will use only 3 GB of RAM – Allotted 3 GB – 0 GB Unused & Blocked

My third container will use only 2 GB of RAM – Allotted 2 GB – 0 GB Unused & Blocked

Since there is no allocated memory (RAM) which is unused, I save 7 GB (16 – 4 – 3 – 2) of RAM by using Docker Container. I can even create additional containers from the leftover RAM and increase my productivity.
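
If you want to observe this yourself, the following sketch may help. By default a container has no fixed memory reservation; an optional upper bound can be set with the --memory option of docker run, and docker stats shows what each running container currently uses. The helper names run_with_limit and show_memory_usage are hypothetical.

```shell
# Start a container in the background with an upper bound on its memory.
# Arguments: container name, memory limit (for example 4g), image name.
run_with_limit() {
  docker run -d --name "$1" --memory "$2" "$3"
}

# Print the current memory usage of all running containers once, then exit.
show_memory_usage() {
  docker stats --no-stream --format 'table {{.Name}}\t{{.MemUsage}}'
}
```

The limit is a cap, not a reservation: a container capped at 4g that needs only 2 GB leaves the rest available to other containers.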

So here Docker Container clearly wins over Virtual machine as I can efficiently use my resources as per my need.

Start-Up

When it comes to start-up, a Virtual Machine takes a lot of time to boot because the guest operating system needs to start from scratch and then load all the binaries and libraries. This is time-consuming and proves very costly when quick startup of applications is needed. A Docker Container, in contrast, runs directly on your host OS and has no guest operating system to boot, so you save precious boot-up time. This is a clear advantage over Virtual Machine.

Consider a situation where I want to install two different versions of Ruby on my system. If I use Virtual Machine, I will need to set up 2 different Virtual Machines to run the different versions. Each of these will have its own set of binaries and libraries while running on different guest operating systems. Whereas if I use Docker Container, even though I will be creating 2 different containers where each container will have its own set of binaries and libraries, I will be running them on my host operating system. Running them straight on my Host operating system makes my Docker Containers lightweight and faster.
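
As a rough sketch of the Ruby scenario, assuming the official ruby image from Docker Hub and its 3.2 and 3.3 tags (the article does not say which versions are meant), each version can run in its own throwaway container. The helper name ruby_version is hypothetical.

```shell
# Print the Ruby version from inside a temporary container.
# Argument: a tag of the official "ruby" image, for example 3.2 or 3.3.
# --rm removes the container again as soon as the command has finished.
ruby_version() {
  docker run --rm "ruby:$1" ruby --version
}
```

Calling ruby_version 3.2 and then ruby_version 3.3 runs both versions side by side on the same host, without installing either of them on the host itself.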

So Docker Container clearly wins over Virtual Machine again on the Startup parameter.

Now, let us consider the final parameter, i.e. Integration.

What about Integration?

Integration of different tools using Virtual Machine may be possible, but even that possibility comes with a lot of complications.

I can have only a limited number of DevOps tools running in a Virtual Machine. As you can see in the image above, if I want many instances of Jenkins and Puppet, I need to spin up many Virtual Machines, because each can have only one running instance of these tools. Setting up each VM brings infrastructure problems with it. I will have the same problem if I decide to set up multiple instances of Ansible, Nagios, Selenium and Git. It will also be a hectic task to configure these tools in every VM.

This is where Docker comes to the rescue. Using Docker Container, we can set up many instances of Jenkins, Puppet, and many more, all running in the same container or running in different containers which can interact with one another by just running a few commands. I can also easily scale up by creating multiple copies of these containers. So configuring them will not be a problem.
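
Here is a minimal sketch of the scaling part, assuming the jenkins/jenkins:lts image from Docker Hub, which serves its web interface on port 8080 inside the container. The helper name start_jenkins, the container names and the host ports are hypothetical.

```shell
# Start N Jenkins containers named jenkins-1 .. jenkins-N on consecutive host ports.
# Arguments: number of instances, first host port (defaults to 8081).
start_jenkins() {
  count="$1"
  first_port="${2:-8081}"
  i=1
  while [ "$i" -le "$count" ]; do
    port=$((first_port + i - 1))
    docker run -d --name "jenkins-$i" -p "$port:8080" jenkins/jenkins:lts
    i=$((i + 1))
  done
}
```

For example, start_jenkins 3 would start three independent Jenkins instances reachable on host ports 8081, 8082 and 8083.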

Along with images, the container is the most important and indispensable part of using Docker. An image packages the necessary tools, and to use that image we need to run a container from it.

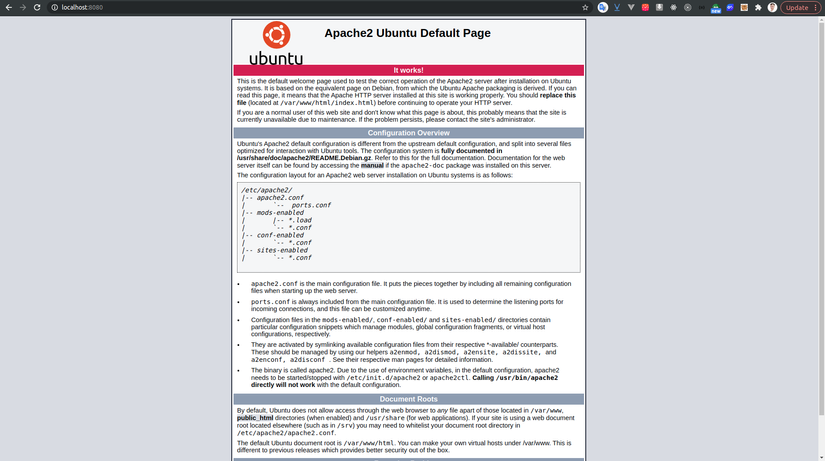

Here "apachelinux" is the name we give to the container, set with the --name option.

-p: maps a port, so we can pick a port on the real machine (host) and assign it to the container. My container uses the docker-apache2 image created above, which serves on port 80, and I map port 8080 on the host to port 80 in the container.

-it: runs the container interactively with a terminal attached.
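
Put together, the options described above would give a command along the following lines. This is a reconstruction from the description, not a copy of the screenshot; in particular, starting /bin/bash as the command is my assumption, and start_apachelinux is a hypothetical helper name.

```shell
# Start an interactive container from the docker-apache2 image.
#   --name apachelinux : name of the container
#   -p 8080:80         : host port 8080 is mapped to container port 80
#   -it                : interactive session with a terminal attached
#   /bin/bash          : shell to start inside the container (assumption)
start_apachelinux() {
  docker run --name apachelinux -p 8080:80 -it docker-apache2 /bin/bash
}
```

Once Apache is running inside the container, it should be reachable from the host at http://localhost:8080.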

After that, we are inside the container, which is based on the ubuntu image, and can try running service apache2 start and see the result:

Note: while in the terminal, typing exit stops the container. If you want to leave the terminal but keep the container running, press Ctrl + p, then Ctrl + q.

To see running containers we use:

$ docker ps

To view all containers, both running and stopped:

$ docker ps -a

While the container is running, you can execute a command inside it using docker exec.

For example, you can open a terminal in a container that has already been started.
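
A small sketch of this, assuming the container is named apachelinux as earlier and that bash is available inside it. The helper name exec_in_container is hypothetical.

```shell
# Run a command inside a running container.
# Arguments: container name, then the command to run.
# Without a command, an interactive bash shell is opened instead.
exec_in_container() {
  name="$1"
  shift
  if [ "$#" -eq 0 ]; then
    docker exec -it "$name" /bin/bash
  else
    docker exec "$name" "$@"
  fi
}
```

exec_in_container apachelinux opens a terminal in the container, while exec_in_container apachelinux service apache2 status runs a single command and returns.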

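To delete a container, use docker rm followed by the container name or ID.

To delete an image, use docker rmi followed by the image name or ID.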
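
Both deletions can be sketched as follows, using the docker rm and docker rmi commands. The helper names remove_container and remove_image are hypothetical; any extra options are passed through unchanged.

```shell
# Remove a container by name or ID (it must be stopped unless -f is given).
remove_container() {
  docker rm "$@"
}

# Remove an image by name or ID.
remove_image() {
  docker rmi "$@"
}
```

For the example in this article that would be remove_container apachelinux followed by remove_image docker-apache2.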

With the -f flag you can force the deletion: docker rm -f removes a container even while it is running, and docker rmi -f removes an image even if stopped containers still reference it.
